Groundbreaking progress in the EU - with significant new risks for US providers and market leaders

After three days of intensive negotiations, the EU has taken a historic step by agreeing on the "AI Act", a comprehensive set of rules for artificial intelligence (AI). The law aims to ensure that AI systems placed on the European market and used in the EU are safe and respect fundamental rights and EU values. It is not only a milestone for the future of our societies and economies, but is also intended to stimulate investment and innovation in AI in Europe. However, a closer look at the rules, especially the transparency requirements, raises the question of whether the new law will really achieve this.

Transparency could become a major problem for generative foundation models

Following intensive discussion, the regulation of general-purpose AI (GPAI) systems such as ChatGPT or Bard now forms a significant part of the "AI Act". Given the wide range of tasks that AI systems can perform and the rapid expansion of their capabilities, it was agreed that GPAI systems and the GPAI models they are based on must meet certain transparency requirements, as originally proposed by the European Parliament. The first three points below apply to all GPAI models; high-impact GPAI models with systemic risk must additionally meet the stricter obligations that follow:

- Technical documentation: Providers of GPAI systems must draw up comprehensive technical documentation. It should provide detailed information on the functionality, development and use of the AI systems.

- Compliance with EU copyright law: Providers must comply with EU copyright law when using training data. This is meant to ensure that AI technologies are developed in a way that respects existing copyrights.

- Detailed summaries of the training content: Providers must publish detailed summaries of the content used for training. This increases transparency and gives a better understanding of the data on which the AI systems' outputs are based.

- Adversarial testing: The models must be deliberately tested with inputs designed to provoke failures or harmful behavior, in order to assess their robustness and reliability.

- Reporting of serious incidents: Operators must report to the Commission on serious incidents related to the use of the AI models.

- Cybersecurity: A high standard of cybersecurity is mandatory for these models.

- Reporting on energy efficiency: Providers must also report on the energy efficiency of their models, which is relevant to the sustainability and environmental impact of AI technology.

The transparency requirements of the AI Act pose a huge risk for leading AI systems such as ChatGPT and Bard. These systems, especially GPT-4 with a reported (but never officially confirmed) 1.76 trillion parameters, have been trained on a vast, almost unmanageable amount of data, largely without the involvement of the originators and without permission or licenses to use this data for training.

The new legislation effectively reverses the previous practice: instead of authors having to laboriously prove copyright infringements, companies such as OpenAI are now responsible for disclosing their training data. This could bring to light a large number of previously unknown copyright infringements.

With this transparency obligation, OpenAI could face a flood of lawsuits. This could put the company in a precarious position, both legally and economically. It remains to be seen how OpenAI will cope with this unprecedented situation.

The other main elements of the provisional agreement:

- Classification of AI systems as high-risk: A horizontal layer of protection, including a high-risk classification, is intended to ensure that AI systems unlikely to cause serious fundamental rights violations or other significant risks are not covered.

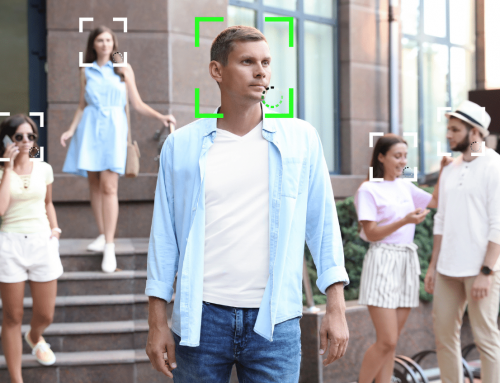

- Prohibited AI practices: Some AI applications are considered to pose an unacceptable risk and are therefore banned in the EU, including cognitive behavioral manipulation and emotion recognition in the workplace and in educational institutions.

- Exceptions for law enforcement agencies: Under certain conditions, law enforcement agencies may use real-time remote biometric identification systems in publicly accessible spaces.

- General-purpose AI systems and foundation models: New provisions address situations where AI systems can be used for many different purposes. Bringing these systems under the AI Act was one of the most controversial points in the legislative process.

- A new governance architecture: An AI Office will be set up within the Commission to oversee the most advanced AI models and enforce the common rules across all Member States.

- Transparency and protection of fundamental rights: Before a high-risk AI system is deployed, its deployers must carry out a fundamental rights impact assessment. This assessment is modeled on the data protection impact assessment under Art. 35 of the GDPR.

- Measures to promote innovation: The provisional compromise provides for a series of measures to create an innovation-friendly legal environment and to promote evidence-based regulatory learning, for example through AI regulatory sandboxes.

Next steps

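Following the provisional agreement, the details of the regulation will be worked out and finalized in the coming weeks. The consolidated text will then be formally adopted after a final vote, which can be expected in the course of this year. According to the provisional agreement, the AI Act should apply two years after its entry into force, with some exceptions for specific provisions.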

Conclusion

The EU's "AI Act" is a landmark step towards the safe, responsible and fundamental rights-compliant use of AI. With this law, the EU is positioning itself as a pioneer in the global debate on the regulation of artificial intelligence, balancing the promotion of innovation against the protection of civil rights. The law could serve as a model for other countries and thus promote the European approach to technology regulation on the global stage.