Picture: Iryna Imago / shutterstock.com

Interrogation by the chatbot? How the police tracked down a child molester

A case from the USA is currently causing a stir: investigators were able to unmask the suspected operator of several darknet forums, not through traditional police work alone, but with the help of ChatGPT. More precisely, through the questions he asked on the platform. In an unprecedented move, the US Department of Homeland Security (DHS) requested user data directly from OpenAI, including all of the suspect's conversations with ChatGPT.

From ChatGPT logs to prosecution

As reported by heise online, the breakthrough came when an undercover investigator chatted with the suspect in one of the forums. During these conversations, the suspect also talked about ChatGPT, described some of his chats with it and even quoted several of his prompts. What sounded harmless turned out to be the key: the investigators used this information to obtain the associated data from OpenAI via a court order.

Not only did OpenAI still hold the prompts in question, they could also be linked to personal data such as the user's name, address and payment information. This enabled the authorities to identify the man beyond doubt: a 36-year-old former employee of a US military base in Germany.

ChatGPT as digital DNA

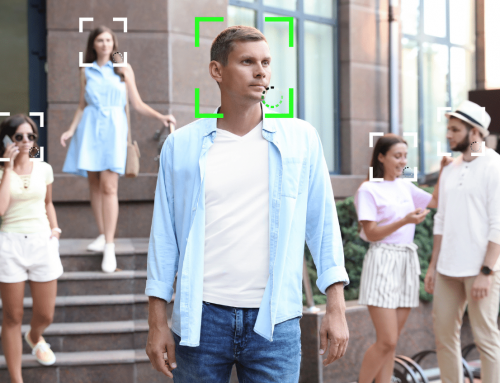

What makes this case particularly controversial is that it is the first known instance of investigators using a so-called "reverse prompt request". The question is no longer what someone searches for on Google, but what someone types into an AI chatbot. The input acts like a digital fingerprint: everyone has their own way of asking, writing and thinking, and that is exactly what is now being analyzed.

The court order required OpenAI to hand over the data, and the company complied, apparently in the form of an Excel spreadsheet. The significance: even if this data was not decisive for the arrest, the case shows that chatbot conversations can now become part of the digital evidence.

What does that mean for you?

The case is rightly causing concern: anyone who regularly uses ChatGPT could unknowingly leave a digital trail that, in the worst case, can be used against them. Even if most people have nothing to hide, the thought that their questions to an AI chatbot are stored, analyzed and, if necessary, handed over to the authorities is unsettling.

Heise online quotes criminal law expert Jens Ferner, who sums it up: "The entire behavior can be evaluated, and AI can be used to create a picture of the person's personality." That amounts to a surveillance potential comparable to DNA traces, or even to comprehensive digital profiling.

A critical assessment

As so often, the digital trail left on the Internet has proved valuable in solving the most serious crimes, and hardly anyone will criticize how investigators proceeded in this case. Nevertheless, an uneasy feeling creeps in once one becomes aware of the method and the scope of what is technically possible. After all, this case shows that interactions with artificial intelligence are not as private as many people think.

Prompts as digital fingerprints? Personality profiles at the touch of a button? If even creative questions to an AI chatbot can end up in the chain of evidence, it becomes clear that we are heading straight into an era of algorithmic screening.

And this is where the real discussion begins. It is not about protecting perpetrators; it is about clarifying the rules before no one can tell where privacy ends and surveillance begins.